Moondream 3: The Art of Visual Reasoning, No Data Center Required

Moondream 3 is an open-source visual language model built to deliver detailed visual understanding from a small operational footprint. The team at Moondream AI, led by former AWS engineer Vikhyat Korrapati, released the latest preview in September 2025. The model is made for developers who need to add computer vision to applications without depending on costly cloud infrastructure. It targets fields such as robotics, home automation, and mobile app development, where on-device processing is a priority.

The central purpose of Moondream 3 is to make vision AI more accessible. It offers a lightweight, private, and efficient alternative to large, resource-heavy models, one that can run on consumer-grade hardware, including laptops and Raspberry Pi boards. By removing the need to collect large datasets, run difficult training procedures, or keep a constant internet connection, the model supports the creation of intelligent edge computing applications.

Best Use Cases for Moondream 3

- Home Automation Builders: For users creating smart home systems, Moondream 3 addresses the privacy concerns of cloud-based camera analysis. It allows you to run a vision model locally to answer specific questions about your home, such as “Did I leave the lights on in the kitchen?” or “Is there a package on the front porch?” The primary benefit is complete data privacy and the absence of subscription fees.

- Robotics Engineers and Hobbyists: This model gives robots and drones a lightweight, low-power method for visually interpreting their environment. Instead of training separate models for different objects, an engineer can use natural language prompts like “Find the red ball” or “Is the path ahead clear?” This flexibility is useful for prototyping and developing autonomous systems with limited budgets.

- QA and Automation Developers: In software testing, Moondream 3 can visually identify and check UI elements on a screen. It reduces reliance on brittle selectors in automation scripts by understanding prompts like “Locate the ‘Submit’ button” or “Is an error message displayed in a dialog box?” This can make UI automation more dependable and easier to maintain.

- Retail and Logistics Analysts: For inventory management, the tool can be deployed on edge devices to analyze camera feeds. It can answer questions like “How many boxes are on this shelf?” or generate a JSON list of products visible in an image. This provides a low-cost method for real-time stock monitoring without the expense of high-volume API calls.
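The retail scenario depends on asking the model for a JSON list of products. Model output is still free text, so a defensive parser is useful before the data reaches an inventory system. The sketch below assumes a hypothetical prompt that requests `[{"name": ..., "count": ...}]`; `parse_product_list` is an illustrative helper, not part of any Moondream API, and the sample answer is invented for demonstration.

```python
import json
from collections import Counter


def parse_product_list(raw_answer: str) -> Counter:
    """Pull a JSON array out of a model answer and total the counts per product name.

    Assumes (hypothetically) the model was prompted to reply with
    [{"name": <str>, "count": <int>}, ...], possibly wrapped in extra prose.
    """
    start = raw_answer.find("[")
    end = raw_answer.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON array found in the model answer")
    items = json.loads(raw_answer[start : end + 1])

    totals: Counter = Counter()
    for item in items:
        count = item.get("count") if isinstance(item, dict) else None
        # Skip malformed entries (missing or non-integer counts) instead of failing the whole scan.
        if isinstance(count, int) and not isinstance(count, bool):
            name = str(item.get("name", "unknown")).strip().lower()
            totals[name] += count
    return totals


# Illustrative answer text, not real Moondream output.
answer = (
    'Here is the list: [{"name": "Cereal box", "count": 4}, '
    '{"name": "soup can", "count": 7}, {"name": "cereal box", "count": 2}]'
)
stock = parse_product_list(answer)
print(stock.most_common())  # [('soup can', 7), ('cereal box', 6)]
```

Normalizing names to lowercase lets "Cereal box" and "cereal box" merge into one stock line; a production version would likely also validate names against a known product catalog.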

Pros

Runs on Most Hardware: Because only about 2B of its 9B parameters are active for each token, Moondream 3 keeps compute requirements low enough to target everything from high-end GPUs to low-power CPUs and Raspberry Pi boards.

Free and Open Source: The Apache 2.0 license permits free use and modification for any purpose, including commercial applications.

High Cost-Effectiveness: Running locally removes the pay-per-call API fees associated with larger hosted models, which can add up to significant savings at scale.

Private by Default: Since all processing happens on your own device, no sensitive visual data is sent to a third-party cloud server.

Good Performance for Its Size: For a model this small, Moondream 3 performs well on tasks such as image captioning, visual question answering (VQA), and OCR.

Flexible and General-Purpose: It can replace multiple specialized computer vision models with a single VLM that is controlled with text prompts.

Available Cloud API: For those who prefer not to host it themselves, the Moondream Cloud offers a free tier of 5,000 requests per day.

Cons

Not for Real-Time Speed: Its processing time per image (several seconds on a Raspberry Pi) makes it unsuited for applications needing instant feedback, like live video object tracking.

Limited Tooling Support: As a newer model, it lacks native support for popular frameworks like llama.cpp or MLX, which can complicate integration.

Struggles with Fine Print: The vision encoder can have difficulty accurately performing OCR on very small or densely packed text within an image.

Requires Technical Skill: This is a tool for developers. It requires comfort with Python and command-line interfaces; it is not an application for non-technical users.
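The free cloud tier and the local latency figure translate directly into a polling budget, which matters for camera-based uses like home automation or shelf monitoring. The arithmetic below is a back-of-the-envelope sketch: the 5,000 requests per day figure comes from this review, while the 5-second per-image latency is an assumed stand-in for "several seconds" on a Raspberry Pi, not a measured value.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def min_poll_interval_s(daily_quota: int, cameras: int = 1) -> float:
    """Shortest evenly spaced interval per camera that keeps all cameras within a daily request quota."""
    return SECONDS_PER_DAY * cameras / daily_quota


def max_frames_per_hour(seconds_per_image: float) -> float:
    """Upper bound for one local device that analyzes images strictly one at a time."""
    return 3600 / seconds_per_image


FREE_TIER_REQUESTS = 5_000  # free-tier figure cited in this review
ASSUMED_PI_LATENCY_S = 5.0  # assumption: placeholder for "several seconds"; measure on your own device

print(min_poll_interval_s(FREE_TIER_REQUESTS))             # 17.28
print(min_poll_interval_s(FREE_TIER_REQUESTS, cameras=3))  # 51.84
print(max_frames_per_hour(ASSUMED_PI_LATENCY_S))           # 720.0
```

At the free tier, one camera can be checked roughly every 17 seconds around the clock, and three cameras sharing the quota roughly every 52 seconds each. A local device at the assumed latency could handle more frames per hour, but neither option comes close to the frame rates live video tracking needs.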

Key Features

- Lightweight MoE Architecture: Moondream 3 uses a 9B-parameter Mixture-of-Experts model with only 2B active parameters, keeping it fast and resource-efficient.

- Visual Question Answering (VQA): Ask questions about an image in natural language and receive a direct textual answer.

- Image Captioning: Automatically generate concise descriptions for any given image.

- Object Detection and Pointing: Identify and locate specific objects within an image based on a text prompt.

- Optical Character Recognition (OCR): Extract and read text found within images.

- Gaze Detection: Identify the direction a person in an image is looking.

- Structured Data Output: Direct the model to format its output in JSON or Markdown for easy parsing.

- Multi-Image Reasoning: Analyze and answer questions based on a sequence of multiple images.

- Local and Cloud Deployment: Run it offline on your own hardware or access it through a cloud API.

- Apache 2.0 License: Permits commercial use, modification, and distribution, subject to the license's notice and attribution conditions.
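The "9B total, 2B active" figure comes from Mixture-of-Experts routing: a small router scores every expert for each token, and only the top-scoring few actually run. The pure-Python toy below illustrates that mechanism generically. The four experts, `k = 2`, and the dot-product gate are illustrative assumptions, not Moondream 3's actual expert count or router design.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    gate_weights: Sequence[Vector],  # one gating vector per expert
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token through the top-k experts and mix their outputs."""
    # Router score per expert: dot product of the token with that expert's gating vector.
    scores = [sum(t * g for t, g in zip(token, gw)) for gw in gate_weights]
    # Only the k highest-scoring experts run; the others cost no compute for this token.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(token)
    for weight, i in zip(mix, chosen):
        expert_out = experts[i](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out, chosen


def make_scaler(factor: float) -> Expert:
    """Toy expert that scales its input; real experts are feed-forward networks."""
    return lambda v: [factor * x for x in v]


experts = [make_scaler(f) for f in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
out, chosen = moe_forward([1.0, 2.0], gate_weights, experts, k=2)
print(chosen)  # [2, 1]: only 2 of the 4 experts ran for this token
print(out)
```

The trade-off is that per-token compute tracks the active experts, while memory still has to hold every expert's weights. That is why a 9B MoE model is cheaper to run than a dense 9B model but not as small in memory as a dense 2B model.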

Moondream 3 Homepage

Vision AI Technology

Frequently Asked Questions

How does vision AI work?

Vision AI works by using neural networks to process visual data like images or videos. These networks are trained on large datasets of labeled images to recognize patterns, objects, and features. After training, the AI can analyze new images to perform tasks like identifying objects or describing a scene.

What are vision AI models?

Vision AI models are specialized artificial intelligence programs designed to interpret and understand visual information. They are the result of the training process and can perform specific tasks such as classifying images, detecting objects within a frame, or reading text from a picture.

What are visual language models?

A visual language model (VLM) combines the capabilities of a vision AI model with a language model. It can process an image to understand its content and then use language to describe that image, answer questions about it, or follow text-based instructions related to the visual information. Moondream 3 is an example of a visual language model.

What is Moondream 3?

Moondream 3 is a small, open-source vision language model that understands images and answers questions about them.

How much does Moondream cost?

Moondream 3 is free to download and run on your own hardware, and its cloud API includes a free tier with 5,000 daily requests.

Can Moondream run on a Raspberry Pi?

Yes, Moondream 3 is specifically designed to be efficient enough to run on low-power devices like a Raspberry Pi (8GB RAM recommended).

Can I use Moondream for a commercial project?

Yes, the model is licensed under Apache 2.0, which allows for commercial use.
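The Raspberry Pi answer can be sanity-checked with simple arithmetic: weight memory is roughly total parameters × bits per parameter ÷ 8. Because a Mixture-of-Experts model normally keeps every expert loaded even though only about 2B parameters are active per token, the estimate below uses the full 9B figure. This is a rough sketch that ignores activations, caches, and runtime overhead; whether a quantized build of Moondream 3 exists for your runtime is an assumption to verify against the official release.

```python
BYTES_PER_GB = 1e9  # decimal gigabytes, matching how RAM sizes like "8GB" are usually quoted


def weight_memory_gb(total_params: float, bits_per_param: int) -> float:
    """Memory for model weights alone: parameters x bits / 8. Excludes activations, caches, and the OS."""
    return total_params * bits_per_param / 8 / BYTES_PER_GB


TOTAL_PARAMS = 9e9  # total parameters cited for Moondream 3; MoE experts all stay resident
BOARD_RAM_GB = 8    # the 8GB Raspberry Pi configuration recommended in the FAQ

for bits in (16, 8, 4):
    need = weight_memory_gb(TOTAL_PARAMS, bits)
    verdict = "fits within" if need < BOARD_RAM_GB else "exceeds"
    print(f"{bits:>2}-bit weights: {need:4.1f} GB ({verdict} {BOARD_RAM_GB} GB of RAM)")
```

On these numbers, the full 9B model only fits in 8 GB of RAM at around 4-bit precision, with limited headroom for everything else, so quantization options and real-world testing are worth checking before committing to a Pi deployment.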

Tech Pilot’s Verdict on Moondream 3

I have been following the development of small, efficient AI models, as they are a practical alternative to the “bigger is better” approach. With Moondream 3, we wanted to see if a tiny, open-source vision model could work for applications on edge devices. Our goal was to test its capabilities in scenarios where privacy and cost are primary factors, moving beyond benchmarks to assess real-world function.

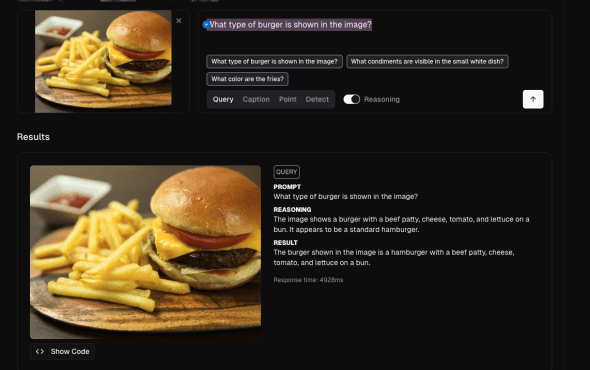

Instead of a complex hardware setup, my first stop was the official Moondream 3 Playground, which lets anyone test the model directly in the browser. I uploaded a picture of a burger and asked, “What type of burger is shown in the image?” In about 9 seconds, it produced an accurate answer: “The burger shown in the image is a hamburger with a beef patty, cheese, tomato, and lettuce on a bun.” It also correctly identified other objects and read the time on a digital clock. The results were impressive for such a small model, but the delay confirms that it is a tool for non-urgent analysis, not real-time alerts.

Next, we tested its use for UI automation. We gave it screenshots of a web application and asked it to identify elements. It located buttons and icons based on descriptions like “the blue login button,” which is valuable for building more dependable automation scripts. The model functions as the “eyes” of the operation; you still need to integrate it with a driver like Selenium to perform the clicks. In this role, the setup resembles a vision-language-action pipeline: the model interprets visual information, and its output drives an action carried out by separate code.
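To hand the model's location answer to a driver such as Selenium, it has to become a click position. The sketch below assumes, hypothetically, that the model returns points as normalized x/y values between 0 and 1 inside a `{"points": [...]}` response; check the actual response format of the API or client you use. `to_pixels` and `pick_click_target` are illustrative helpers, and the click itself is left to your driver.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Viewport:
    width_px: int
    height_px: int


def to_pixels(point: Dict[str, float], viewport: Viewport) -> Tuple[int, int]:
    """Convert a normalized (0-1) point into integer pixel coordinates on the screenshot."""
    x = min(max(point["x"], 0.0), 1.0)
    y = min(max(point["y"], 0.0), 1.0)
    # Clamp to the last valid pixel so a value of 1.0 does not land one past the edge.
    return (
        min(int(x * viewport.width_px), viewport.width_px - 1),
        min(int(y * viewport.height_px), viewport.height_px - 1),
    )


def pick_click_target(points: List[Dict[str, float]], viewport: Viewport) -> Tuple[int, int]:
    """Use the first located point; fail loudly if the model found nothing."""
    if not points:
        raise LookupError("model did not locate the element; refusing to click blindly")
    return to_pixels(points[0], viewport)


# Hypothetical response shape for the prompt "the blue login button".
response = {"points": [{"x": 0.82, "y": 0.07}]}
screen = Viewport(width_px=1280, height_px=800)
target = pick_click_target(response["points"], screen)
print(target)  # (1049, 56)
# A driver such as Selenium would then click at `target`; that step depends on your setup.
```

Screenshots on high-DPI displays are often captured at a different scale from the page's CSS pixels, so the resulting coordinates may need rescaling before they are passed to the driver.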

Top Alternatives to Moondream 3

- LLaVA: LLaVA is an open-source model that targets high performance on academic benchmarks. It is generally larger and requires more powerful hardware than Moondream 3. You should choose LLaVA when your main goal is achieving the highest accuracy on general visual tasks and you have a dedicated GPU.

- Qwen-VL: From Alibaba Cloud, Qwen-VL is proficient at tasks needing high precision, such as generating exact bounding boxes for objects. It is more feature-rich than Moondream 3 for these specific uses but is also more complex. Qwen-VL is a better choice if your application involves chart interpretation or precise object localization.

- SmolVLM: This model is a direct competitor in the tiny VLM category. It uses specific techniques to minimize memory and token usage, making it even more lightweight than Moondream 3. If your application runs in a highly resource-constrained environment, SmolVLM might have an advantage in speed and memory footprint.

Final Assessment

After this evaluation, we find that Moondream 3 is a highly functional tool. It is not designed to be the most powerful vision model available, but rather one of the most accessible. Its utility comes from its constraints. Because it is small, it can run on modest, widely available hardware. Because it runs locally, it maintains privacy. And because it is open-source, it is free.

There is a learning curve, as this is a tool for builders. But for any developer looking to add visual intelligence to a project without a constant cloud connection, this tool is a credible option and a reference point for its category. It is a suitable choice for home automation projects, robotics prototyping, and applications where cost and local processing are priorities.