Design Arena: Benchmarking AI to Find the Actual Best Tool

Design Arena is a web platform that evaluates AI-generated designs through crowdsourced human preference testing. Founded in 2025 by Harvard graduates Grace Li, Kamryn Ohly, and Jayden Personnat, it came out of the Y Combinator Summer 2025 batch and gained notice by attracting over 47,000 users in its first month.

Design Arena addresses a specific need in the AI field: the lack of a standard method for measuring the aesthetic and functional quality of AI-generated visuals. While many AI models can generate code and images, they often lack a basic sense of design appeal and usability, sometimes producing flawed outputs like white text on a white background.

The platform is made for developers who need front-end code and for designers who want to compare design variants. Its main function is to replace slow, subjective manual design reviews with a quick, data-supported, interactive process. Through head-to-head voting, Design Arena produces a public ranking of generative AI models, which pushes the industry to improve the quality of AI-generated design and offers a clear performance standard.

Best Use Cases for Design Arena

- AI/ML Engineers: For teams developing generative models, Design Arena offers a direct way to measure their model’s performance against others. They can use the private evaluation feature to track improvements between versions, using real-world human preference data to find weaknesses and refine their algorithms for better visual and functional outputs.

- UI/UX Designers: A designer creating multiple concepts for a new website can use the platform to quickly generate variants from different AI tools. By submitting these designs for votes, they can collect thousands of user opinions much faster than with traditional focus groups, letting them concentrate on improving the highest-rated concepts.

- Software Developers: A solo developer or a small team without a designer still needs a working, appealing user interface. They can test prompts across various AI models and use the platform’s leaderboard and voting data to choose the most effective UI components. Relying on benchmark rankings this way saves considerable time.

- Product Managers: When deciding which AI design tool to use in a company’s workflow, a Product Manager can consult the public leaderboards on the platform as a source of objective data. This helps them make a reasoned decision based on which models consistently produce high-quality designs for specific tasks.
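For the version-tracking scenario in the AI/ML Engineers use case, a team would want to know whether a new model version is really preferred or whether the votes are just noise. The article does not describe how Design Arena reports this, so the following is only a minimal sketch under stated assumptions: the vote tallies are made up, and the Wilson score interval is one common statistical choice, not the platform's documented method.

```python
import math


def wilson_interval(wins: int, total: int, z: float = 1.96):
    """Wilson score interval for a win rate; z=1.96 is roughly 95% confidence."""
    if total == 0:
        raise ValueError("need at least one vote")
    p = wins / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return centre - half, centre + half


# Hypothetical tallies (not real data): head-to-head votes in which
# the new model version was preferred over the previous one.
new_wins, total_votes = 290, 500

low, high = wilson_interval(new_wins, total_votes)

# Treat the new version as an improvement only if the whole interval
# sits above a 50% win rate.
improved = low > 0.5
print(f"win rate {new_wins / total_votes:.1%}, "
      f"interval {low:.3f} to {high:.3f}, improved={improved}")
```

Requiring the lower bound to clear 50% is a deliberately conservative rule: a small lead over a few dozen votes will not count as an improvement, while the same lead over thousands of votes will.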

Pros

Data-Supported Benchmarking: Uses a quantitative Elo rating system to provide an objective score for the subjective field of design.

Quick Feedback Loop: Collects thousands of user preferences on design variants much faster than traditional A/B testing.

Industry-Wide Transparency: Public leaderboards create an accessible standard, encouraging competition and development among AI model creators.

Simple and Engaging Interface: The "this-or-that" voting system is direct and holds user attention, making it easy to collect a large volume of preference data.

Addresses a Core AI Problem: Directly confronts the issue of "AI taste," pushing models beyond technical correctness to achieve aesthetic appeal.

Private Evaluation for Teams: Offers a valuable service for companies to confidentially test and track the performance of their own models.

Cons

Potential for Subjectivity: Crowdsourced votes can be swayed by personal taste rather than objective design principles.

Prompt Ambiguity: Voters may not know the original prompt, causing them to favor a prettier design over one that better fulfilled the request.

Limited Integrations: As a new platform, it does not yet have direct integrations with major design software or development environments.

New and Still Developing: Having launched recently, the long-term dependability of its ranking system is still being established.

-

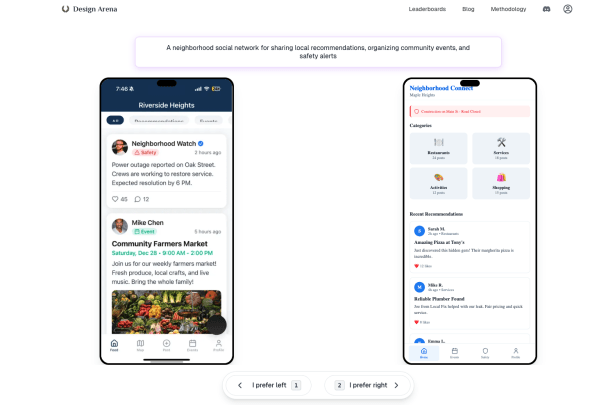

Head-to-Head Voting: The main function where users are shown two AI-generated designs and vote for their preference.

-

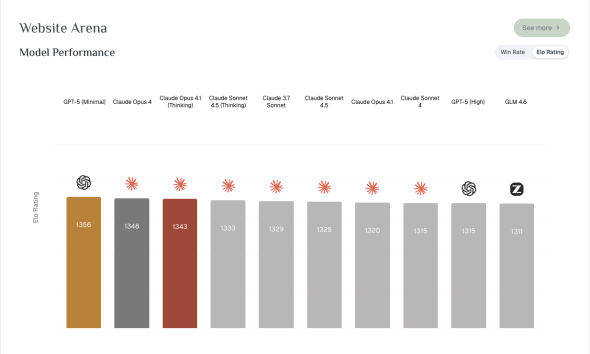

Elo Ranking System: An algorithm that scores and ranks AI models based on the results of their design comparisons.

-

Public Leaderboards: A frequently updated generative AI ranking of the best-performing AI models for various design tasks.

-

Private Evaluations: A B2B service letting companies use the platform’s structure for internal version testing of their models.

-

Multi-Modal Support: Appraises a range of AI-generated content, including front-end UI, images, video, and audio.

-

Methodology Transparency: The platform provides details on the system prompts and methods used to generate the designs.

-

Interactive User Experience: The simple, click-based voting system makes participation easy and appealing for a wide audience.

-

Crowdsourced Data: Uses a large user base from over 136 countries to collect a wide range of preference data.
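To make the Elo ranking and leaderboard features above more concrete, here is a minimal sketch of how head-to-head votes could be turned into a ranked list. It uses the textbook Elo update; the K-factor of 32, the starting rating of 1500, the model names, and the function names are all assumptions for illustration, since the article does not give the parameters Design Arena actually uses.

```python
from collections import defaultdict

K = 32          # assumed update step, not a published Design Arena value
START = 1500.0  # assumed starting rating for every model


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def apply_vote(ratings, winner: str, loser: str, k: float = K) -> None:
    """Update both ratings in place after one head-to-head vote."""
    exp_win = expected_score(ratings[winner], ratings[loser])
    delta = k * (1.0 - exp_win)  # an upset win moves more points
    ratings[winner] += delta
    ratings[loser] -= delta


def leaderboard(votes):
    """Replay (winner, loser) votes in order and return models sorted by rating."""
    ratings = defaultdict(lambda: START)
    for winner, loser in votes:
        apply_vote(ratings, winner, loser)
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


# Hypothetical votes: each tuple is (preferred design's model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]

board = leaderboard(votes)
for name, rating in board:
    print(f"{name}: {rating:.1f}")
```

Because the winner gains exactly what the loser gives up, the total number of points in the system stays constant, and a win against a higher-rated model is worth more than a win against a lower-rated one.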

Frequently Asked Questions

What is Design Arena?

Design Arena is a web platform that benchmarks AI models on the designs they generate, using a crowdsourced voting system.

How does Design Arena rank AI models?

It uses an Elo rating system, where models gain or lose points based on user votes in head-to-head comparisons.

Who is the target audience for Design Arena?

The platform targets developers needing UI components and designers creating design variants.

Is Design Arena free to use?

The public voting and leaderboards are free; the company offers paid private evaluations for businesses.

What kind of designs does it evaluate?

It evaluates front-end UI, images, video, and audio made by AI models.

Which AI tool is best for beginners?

For beginners focused on design, user-friendly tools like Midjourney for images or various prompt-based UI generators are excellent starting points. The “best” tool depends on the user’s specific goal, and platforms like Design Arena help beginners see which models produce high-quality results for common tasks.

Which AI tools should I learn first?

Beginners should first learn prompt-based generative AI tools because they do not require coding knowledge. Starting with a popular and high-performing image generator helps teach the fundamentals of prompting, which is a core skill for using most modern AI applications.

How do I start learning AI for beginners?

Beginners can start learning AI by using accessible tools to understand their capabilities and limitations. Practical first steps include practicing how to write effective prompts and using evaluation platforms like Design Arena to analyze and compare results. This hands-on approach is effective for learning to apply AI.

Tech Pilot’s Verdict on Design Arena

I have observed the AI field for years, and while models keep getting more technically capable, they often lack good taste. This is why Design Arena stood out to me. It is not another AI that generates content; it is an arena where AI-generated designs are judged by human preference. For this review, my goal was to see whether a “this-or-that” voting system could genuinely address the design quality problem.

First, I used the public platform. The experience is polished and immediately clear. You are presented with two website headers or two icons, and you click on the one you prefer. It is compelling. After about 30 votes, I checked the leaderboards. Seeing models like Midjourney and DALL-E 3 ranked by user preference felt like a significant development. It is one thing to say a model is powerful; it is another to show it can create something people actually like.

The real value here is not limited to the casual user. I pictured myself as a developer with a strict deadline. Instead of struggling with CSS, I could consult the platform’s leaderboard to see which AI model produces the best “sign-up form with a minimalist aesthetic.” This changes the platform from a simple website into a useful decision-making tool. It provides data to guide creative work, removing some of the guesswork in using generative AI.

However, the platform has its limitations. The main issue is the subjective nature of design. Is the crowd always right? A popular design might not be the most effective for a specific brand. There is also the matter of context. Without knowing the original prompt, am I voting for the most attractive image or the one that best followed instructions? This is a detail the platform will need to handle as it grows.

Top Alternatives to Design Arena

- Manual A/B Testing: This traditional method involves creating design variants and testing them with a target audience. Manual A/B testing gives specific, context-rich feedback from your actual users. However, it is much slower and more expensive than the rapid feedback from Design Arena. It is a better choice for validating a final design with your customer segment, whereas Design Arena is better for broad, early-stage concept testing.

- Arena Platforms for LLMs (e.g., LMSYS Chatbot Arena): These platforms are conceptually similar to Design Arena, but for language models. They use a similar head-to-head voting approach with Elo-style ratings to rank chatbots. Their strength is their proven success in benchmarking text-based AI models. Their clear weakness is that they are not suited for visual design evaluation.

- Integrated Evaluation Tools: Some larger AI development platforms are starting to include internal “arena” modes for model comparison. These tools are useful because they are built into the developer’s workflow. Their weakness is that they do not have the scale or diversity of a public, crowdsourced platform like Design Arena. They are better for quick, internal checks, while Design Arena is better suited to comprehensive, public-facing benchmark rankings of generative AI.

In conclusion, Design Arena is a straightforward approach to a complex problem. It has created a clear, data-supported benchmark for the subjective field of AI design. While it faces issues related to subjectivity, its utility for developers, designers, and the AI industry is apparent. It is a necessary step forward in the development of creative AI.