Magi AI: Open-Source AI Video with Smooth Transitions

Magi AI is an open-source AI video generator that creates video from still images guided by text prompts. Developed by Sand AI and appearing in public discussions around April 2025, this tool enters the competitive field of AI video with a distinct method. The platform is particularly useful for creators, developers, and animators who need a high degree of control and consistency in their video projects.

Magi AI’s main advantage comes from its autoregressive, chunk-by-chunk generation process, which builds videos 24 frames at a time. Because each chunk is generated in sequence after the ones before it, this approach directly addresses the temporal inconsistencies common in AI video. Its chunk-wise prompting also allows for longer, evolving narratives with fluid shifts between different actions, a notable step forward for image-to-video transition AI. By releasing its model as open source, Sand AI presents an alternative to closed, proprietary systems.
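
To make the chunk-by-chunk idea concrete, here is a minimal Python sketch of how a 24-frame chunk schedule with per-chunk prompts might be planned and then run autoregressively. It is an illustration only, not Magi AI's actual code or API: `plan_chunks`, `generate_video`, and the `generate_chunk` callable are hypothetical names, and the model call is replaced by a stub.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

CHUNK_FRAMES = 24  # chunk size stated in the article


@dataclass
class Chunk:
    index: int
    start_frame: int  # inclusive
    end_frame: int    # exclusive
    prompt: str


def plan_chunks(total_frames: int, prompts: dict[int, str],
                default_prompt: str = "") -> list[Chunk]:
    """Split a video into 24-frame chunks.

    `prompts` maps a chunk index to the prompt that takes effect there;
    later chunks keep the most recent prompt until a new one appears.
    """
    if total_frames <= 0:
        raise ValueError("total_frames must be positive")
    n_chunks = -(-total_frames // CHUNK_FRAMES)  # ceiling division
    chunks: list[Chunk] = []
    current = default_prompt
    for i in range(n_chunks):
        current = prompts.get(i, current)
        start = i * CHUNK_FRAMES
        end = min(start + CHUNK_FRAMES, total_frames)
        chunks.append(Chunk(i, start, end, current))
    return chunks


def generate_video(image: object, chunks: list[Chunk],
                   generate_chunk: Callable[[list, str, int], list]) -> list:
    """Run the chunks in order, feeding all earlier frames to each call.

    `generate_chunk(history, prompt, n_frames)` is a hypothetical stand-in
    for the real model; it must return `n_frames` new frames.
    """
    history = [image]  # the input still image seeds the sequence
    for chunk in chunks:
        n_frames = chunk.end_frame - chunk.start_frame
        history.extend(generate_chunk(history, chunk.prompt, n_frames))
    return history[1:]  # generated frames only, without the seed image
```

For example, `plan_chunks(60, {0: "walk", 2: "run"})` yields three chunks covering frames 0-24, 24-48, and 48-60, with the prompt switching to "run" at the third chunk. The point of the sketch is the loop in `generate_video`: every chunk sees everything generated before it, which is what lets a video be extended or redirected mid-sequence.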

Best Use Cases for Magi AI

- Content Creators & Marketers: For professionals producing social media and marketing campaigns, Magi AI addresses the need to create appealing video content quickly. It can turn a single product image into a dynamic video suitable for various platforms, which can help hold audience attention.

- Digital Artists & Animators: Artists can apply this AI to animate their static digital paintings or illustrations. The tool allows for artistic work by creating subtle, looping animations or extending visual narratives from one frame, offering a new method for creative expression.

- AI Researchers & Developers: As a fully open-source model with detailed technical reports, Magi AI is a practical platform for research and development. Researchers can study its specific architecture, and developers can build on the source code to create custom applications.

- Game Developers: Developers in the gaming industry can use the image-to-video tool to generate animated textures or concept visuals from game assets. This can speed up the creative process by providing a quick way to see how static assets will appear in motion.

Pros

- Distinct Autoregressive Model: The chunk-by-chunk generation method produces videos with good temporal consistency and natural motion.

- Fully Open-Source: Its Apache 2.0 license offers transparency and freedom for developers to modify, build upon, and audit the technology.

- Continuous Video Extension: A key feature allowing users to extend videos, creating long, coherent content without obvious cuts.

- High-Quality Output: The model’s performance compares favorably against certain commercial competitors in motion quality and instruction following.

- Generous Free Access: New users get 500 free credits, and the open-source code allows for free self-hosting for those with technical knowledge.

- Suited for Future Streaming Applications: Its architecture is well-suited for potential real-time and streaming uses.

- Community Support: Being open-source encourages a collaborative setting where developers and researchers can contribute to its progress.

Cons

- Image-to-Video Only: The current version does not create video from text, which limits its application compared to competitors.

- Occasional Blurriness: Some user-generated videos show a brief period of blurriness at the beginning before the image sharpens.

- Distortion in Complex Motion: The model can sometimes produce distortions, particularly with fine details like fingers during complex movements.

- Hardware Intensive: Self-hosting the Magi AI model requires considerable computational resources, such as high-end NVIDIA GPUs.

Key Features

- Autoregressive Video Generation: Predicts and generates video in sequential 24-frame chunks for fluid and consistent motion.

- Transformer-based VAE: Uses a Transformer-based Variational Autoencoder for efficient decoding and variable resolution support.

- Video Extension: Adds new segments to existing videos or user uploads to create longer content without manual editing.

- Multiple Model Sizes: Available in 24B and 4.5B parameter versions to fit different hardware setups.

- Advanced Temporal Modeling: Uses causal attention mechanisms to maintain stability and coherence over long video durations.

- Chunk-wise Prompting: Allows for detailed control by applying different prompts to different parts of the video.

- Open-Source Codebase: The full source code and a 61-page technical report are publicly available.

- High-Resolution Support: Capable of generating videos in various resolutions, including 720p and 1440p.
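
As a rough illustration of the causal attention idea mentioned in the features above, the sketch below builds a chunk-level ("block-causal") attention mask in plain Python: a frame may attend to every frame in its own chunk and in earlier chunks, but never to a later chunk. Whether Magi AI's mask has exactly this shape is an assumption made here for illustration; `block_causal_mask` is a hypothetical helper, not part of the project's codebase.

```python
def block_causal_mask(n_frames: int, chunk_frames: int = 24) -> list[list[bool]]:
    """Return mask[q][k] == True when query frame q may attend to key frame k.

    Frames are grouped into chunks of `chunk_frames`; attention is allowed
    within a chunk and toward earlier chunks, never toward later ones.
    """
    if n_frames <= 0 or chunk_frames <= 0:
        raise ValueError("n_frames and chunk_frames must be positive")
    chunk_of = [i // chunk_frames for i in range(n_frames)]
    return [
        [chunk_of[k] <= chunk_of[q] for k in range(n_frames)]
        for q in range(n_frames)
    ]


# Small demo with 6 frames and 2-frame chunks so the pattern is visible.
mask = block_causal_mask(6, chunk_frames=2)
rows = ["".join("X" if allowed else "." for allowed in row) for row in mask]
# rows[0] == "XX...."  (first chunk sees only itself)
# rows[2] == "XXXX.."  (second chunk sees chunks 0 and 1)
# rows[4] == "XXXXXX"  (third chunk sees everything so far)
```

Because later chunks never influence earlier ones under a mask like this, already-generated frames stay fixed while new ones are appended, which fits the video extension and streaming use cases described in this article.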

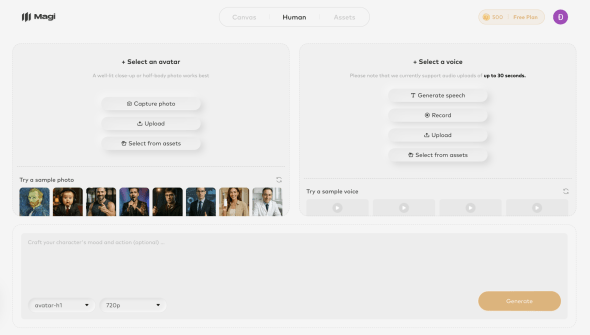

Magi Human

Video Transition From Magi AI

Frequently Asked Questions

- What is Magi AI?

  Magi AI is an open-source AI model that generates high-quality video from a single image.

- Who developed Magi AI?

  Magi AI was developed and released by the research team at Sand AI.

- How do you make a video transition with AI?

  You make a video transition with AI by using a tool like Magi AI to generate motion from a static starting point. The user provides an image, and the AI model predicts and creates subsequent video frames to produce movement, effectively transitioning the still image into a video clip.

- What is an image-to-video transition AI?

  An image-to-video transition AI is a type of artificial intelligence that specializes in turning a single picture into a video sequence. Magi AI is an example of this technology. It analyzes the input image and generates new frames that create fluid motion and animation.

- Does Magi AI support text-to-video?

  No, the Magi AI platform currently only supports image-to-video generation.

- Is Magi AI free to use?

  Magi AI offers 500 free credits on its official platform, and its source code is available for free under the Apache 2.0 license.

- What makes Magi AI different from other AI video tools?

  Its main differentiator is its autoregressive, chunk-by-chunk generation process, which allows for good temporal consistency and features like continuous video extension.

Tech Pilot’s Verdict on Magi AI

I have been analyzing the functions and market position of Magi AI. My purpose was to determine if its autoregressive method gives it a real advantage. The available user-generated examples, technical documents, and direct comparisons give a clear view of the tool’s strengths and weaknesses.

For my main test, I decided on a common commercial use case: animating a product shot. I took a high-resolution studio image of a sleek beverage can. My prompt was straightforward: “The can rotates 360 degrees smoothly on a plain background.” This is a simple animation, but it’s a difficult one for many AI models, which often struggle to maintain an object’s shape and texture during rotation.

From a value perspective, the pricing of this AI model is excellent. The 500 free credits are a good starting point, and its open-source status is a major benefit for developers and studios with the technical ability to self-host. It makes high-quality AI video technology more accessible.

Top Alternatives to Magi AI

- Sora by OpenAI: Sora is a competitor known for its realistic and lengthy text-to-video generations. Unlike Magi AI, it interprets complex text prompts to create detailed scenes. Sora is a closed-source, premium product and is a better choice for users who need high fidelity from text prompts and are willing to pay for it.

- Kling AI: Kling is another closed-source model that performs well in generating realistic human movements. It offers both text-to-video and image-to-video functions, making it more versatile than the current Magi AI version. Kling may be a better choice for creators who need reliable text-to-video and advanced camera control.

- Runway Gen-2: Runway is a creative suite with many AI tools, including text-to-video and video-to-video generation. Its main differentiator is its full platform for generating and editing AI video. Runway is a good choice for artists who want an all-in-one platform for a complete creative workflow.

Final Verdict

My final assessment is that Magi AI is a capable and valuable tool, especially for the open-source community. It occupies its own niche, focused on high-quality image-to-video transitions and extensibility. For developers and creators who value transparency and control, this AI model is a top open-source option. For those who need advanced text-to-video realism, Sora and Kling are the leading choices.