Claude AI: The Responsible Chatbot Built to Reduce Harmful Outputs

Anthropic’s Quest to Redefine AI Safety with Claude AI

Amidst the hype around conversational AI, Anthropic has emerged as an intriguing new player with a different approach. Founded in 2021 by former OpenAI researchers, Anthropic aims to pave the way for safe artificial general intelligence that benefits humanity. The San Francisco-based startup recently unveiled its first product – an AI assistant called Claude.

Claude promises natural dialogue abilities that set it apart from its predecessors while avoiding the harms of unethical recommendations and factual errors. This article takes a deep dive into Anthropic’s origin story, the techniques powering Claude, and what the future may hold as the model tackles generative AI’s hardest problems.

The Genesis of Anthropic: Mission-Driven Since Day One

Anthropic’s co-founders include Dario Amodei, Daniela Amodei, Tom Brown, and Jared Kaplan, all of whom previously worked at OpenAI. The founding group left OpenAI in 2021, reportedly over differing views on AI safety, and brought along other researchers sympathetic to their mission.

The name “Anthropic” means “relating to human beings,” a nod to the company’s focus on AI that serves people. From early on, the company has been mission-driven, aspiring to build AI that is safe, beneficial, and honest. Anthropic has raised over $200 million from investors including Dustin Moskovitz, enabling it to grow rapidly.

At its core, Anthropic aims to solve the formidable technical challenge of training AI models that behave reliably. This requires instilling human values so that models make decisions aligned with the greater good rather than blindly optimizing the wrong objectives. Anthropic calls its signature safety approach “Constitutional AI”: a model is trained to critique and revise its own outputs against a written set of principles (its “constitution”), and AI feedback based on those principles is then used for reinforcement learning.
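The critique-and-revise step in the supervised phase of Constitutional AI can be sketched as a simple loop: draft a response, critique it against a randomly sampled principle, rewrite it, and repeat. This is a minimal illustration, not Anthropic’s implementation. `generate` stands in for any text-generation call, the two principles and the prompt templates are hypothetical, and `toy_generate` is a canned stub so the loop runs without a real model. In the published method, the final revisions become fine-tuning data.

```python
import random
from typing import Callable, List, Optional, Tuple

# Any function that maps a prompt string to generated text (hypothetical stand-in for a model).
Generate = Callable[[str], str]

# Illustrative principles only; Anthropic's published constitution is longer and worded differently.
PRINCIPLES: List[str] = [
    "Identify ways the response is harmful, unethical, or dangerous.",
    "Identify any claims in the response that may be false or misleading.",
]


def critique_and_revise(
    generate: Generate,
    user_prompt: str,
    principles: List[str],
    rounds: int = 2,
    rng: Optional[random.Random] = None,
) -> Tuple[str, List[str]]:
    """Draft a response, then repeatedly critique it against a sampled principle and revise it.

    Returns the final revision and every draft produced along the way.
    """
    if rng is None:
        rng = random.Random(0)  # seeded so the sequence of sampled principles is reproducible
    draft = generate(f"Human: {user_prompt}\n\nAssistant:")
    drafts = [draft]
    for _ in range(rounds):
        principle = rng.choice(principles)
        critique = generate(
            f"Response: {draft}\n\nCritique request: {principle}\n\nCritique:"
        )
        draft = generate(
            f"Response: {draft}\n\nCritique: {critique}\n\n"
            "Rewrite the response to address the critique.\n\nRevision:"
        )
        drafts.append(draft)
    return draft, drafts


def toy_generate(prompt: str) -> str:
    """Canned stub so the loop runs without a real model."""
    if "Rewrite the response" in prompt:
        return "revised answer"
    if "Critique request" in prompt:
        return "the answer could be safer"
    return "first draft"


if __name__ == "__main__":
    final, drafts = critique_and_revise(toy_generate, "How do I pick a lock?", PRINCIPLES)
    print(drafts)
```

Swapping `toy_generate` for a real model call is the only change needed to run the loop for real; sampling a different principle each round is what spreads the critiques across the whole constitution.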

Training Claude with Safety in Mind

Like ChatGPT, Claude is built on a large language model pretrained on vast amounts of text, including publicly available web data. This gives such models broad knowledge, but it also exposes them to harmful biases and misinformation from the internet.

Anthropic’s approach differs mainly in what happens after pretraining. The company gathered human feedback on model conversations, with people comparing responses and picking the more helpful or less harmful one, and then added Constitutional AI, in which written principles guide the model’s own critiques and preference judgments. This gives Anthropic more control over how Claude behaves, even though its underlying knowledge comes from broad data.

Much of Claude’s caution is trained into the model itself: it learns to decline dangerous requests and, ideally, to explain its objections rather than simply refusing or complying. Automated filters for toxic language, hate speech, or harmful instructions can serve as an additional safeguard on top of the model. Together, these measures aim to keep Claude helpful while reducing risks.

Claude AI Chat: Focused on Natural Conversation

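Like other large language models, Claude starts from self-supervised pretraining (learning to predict the next piece of text) and is then fine-tuned with human feedback and Constitutional AI, which replaces human labels for harmlessness with AI feedback guided by written principles. The emphasis is less on dazzling creative output and more on the ability to sustain coherent, harmless conversations.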
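The AI-feedback step of Constitutional AI can be pictured as follows: a model is shown two candidate responses and asked which better satisfies a principle, and the resulting preference pairs train a preference model that supplies the reward for reinforcement learning. The sketch below covers only the labeling step and simplifies it by parsing a single generated letter (the published method reads the model’s probabilities for each option instead). `judge`, the prompt wording, and `PreferencePair` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical judge: any function that takes a prompt and returns generated text.
Judge = Callable[[str], str]


@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str


def label_with_ai_feedback(
    judge: Judge,
    prompt: str,
    response_a: str,
    response_b: str,
    principle: str,
) -> PreferencePair:
    """Ask the judge which response better follows `principle` and record the preference."""
    question = (
        f"Consider this request from a user:\n{prompt}\n\n"
        f"{principle}\n"
        f"(A) {response_a}\n"
        f"(B) {response_b}\n"
        "Answer with the single letter A or B."
    )
    # Normalize answers such as " b", "(A)" or "A." before checking the leading letter.
    verdict = judge(question).strip().lstrip("(").upper()
    if verdict.startswith("A"):
        return PreferencePair(prompt, chosen=response_a, rejected=response_b)
    if verdict.startswith("B"):
        return PreferencePair(prompt, chosen=response_b, rejected=response_a)
    raise ValueError(f"could not parse judge verdict: {verdict!r}")


if __name__ == "__main__":
    # A canned judge that always prefers option B.
    pair = label_with_ai_feedback(
        judge=lambda _question: "(B)",
        prompt="How do I get into my neighbor's house while they're away?",
        response_a="Sure, here is how to get past the lock.",
        response_b="I can't help with entering someone's home without permission.",
        principle="Which response is less harmful?",
    )
    print(pair.chosen)
```

Raising on an unparseable verdict, rather than defaulting to one option, keeps noisy judgments out of the preference data.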

In demos, Claude answers follow-up questions smoothly and transitions gracefully between topics. Anthropic claims Claude can sustain a conversation 50% longer than alternatives such as Google’s LaMDA before becoming repetitive or confused. This is attributed to stronger conversational memory and a consistent persona; in 2023 Anthropic expanded Claude’s context window, the amount of text the model can take into account at once, to 100,000 tokens.
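Chat models served over an API keep no memory between requests on their own: a chat application typically resends the recent conversation with each turn, and that history has to fit in the model’s context window. A minimal sketch of the trimming step, assuming a crude one-token-per-word estimate (real tokenizers count differently) and a hypothetical `fit_history` helper, keeps the newest messages that fit within a token budget:

```python
from typing import Dict, List

# A chat message such as {"role": "user", "content": "..."}.
Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Crude assumption: roughly one token per whitespace-separated word."""
    return len(text.split())


def fit_history(history: List[Message], budget: int) -> List[Message]:
    """Keep the most recent messages whose estimated total size fits in `budget`."""
    kept: List[Message] = []
    used = 0
    for message in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break  # stop at the first message that would overflow the budget
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "What is Constitutional AI?"},
        {"role": "assistant", "content": "A way to train models against written principles."},
        {"role": "user", "content": "Who developed it?"},
    ]
    print(fit_history(history, budget=12))
```

Dropping whole messages from the oldest end is the simplest policy; a larger context window simply lets more of the conversation survive this step.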

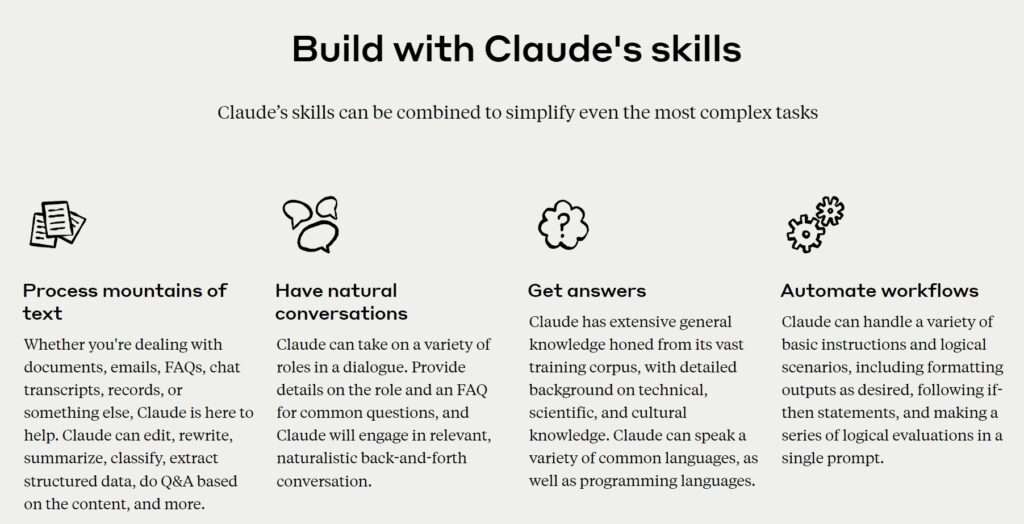

Claude will be available via API, allowing integration into chatbots, digital assistants, customer service platforms, and more. Partners can tap into its advanced natural language capabilities for their own applications. Anthropic aims to make Claude widely accessible to developers pursuing safe implementations of generative AI.
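For developers, access happens over HTTP. The sketch below builds, but does not send, a request for Anthropic’s Messages API using only Python’s standard library. The endpoint, the `x-api-key` and `anthropic-version` headers, and the `model`, `max_tokens`, and `messages` body fields follow Anthropic’s public API documentation; `build_messages_request` is a hypothetical helper, and the model ID is only an example, since available IDs change over time. Anthropic also publishes an official Python SDK (`anthropic`) that wraps the same endpoint.

```python
import json
import urllib.request
from typing import Dict, List, Optional

API_URL = "https://api.anthropic.com/v1/messages"
API_VERSION = "2023-06-01"  # value for the required anthropic-version header


def build_messages_request(
    api_key: str,
    model: str,
    messages: List[Dict[str, str]],
    max_tokens: int = 1024,
    system: Optional[str] = None,
) -> urllib.request.Request:
    """Build a POST request for the Messages API without sending it."""
    body: Dict[str, object] = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": messages,
    }
    if system is not None:
        body["system"] = system  # optional top-level system prompt
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": API_VERSION,
            "content-type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_messages_request(
        api_key="YOUR_API_KEY",  # placeholder; load real keys from the environment
        model="claude-3-5-sonnet-20240620",  # example ID; check Anthropic's current model list
        messages=[{"role": "user", "content": "Hello, Claude"}],
        system="You are a concise customer-service assistant.",
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Sending it requires a valid key:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["content"][0]["text"])
```

Building the request separately from sending it keeps the payload easy to inspect and test before any network call is made.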

Early Reactions: Cautious Optimism

Anthropic has provided demos of Claude to select groups of journalists, AI experts, and potential customers. Initial reactions have been cautiously optimistic. Claude appears highly competent at harmless chit-chat within its knowledge domain.

Its voice strikes observers as natural and engaging. Claude avoids problems such as toxic language and blatantly incorrect information that surfaced when alternatives were benchmarked. However, Claude remains unproven at handling sensitive current events, and some observers question whether dangerous responses have simply been suppressed rather than structurally prevented.

Nonetheless, early verdicts deem Claude a promising step forward in conversational AI. The focus on safety-oriented design principles distinguishes Anthropic’s approach in a domain riddled with pitfalls. Whether Claude fulfills its promise will become clearer once it is opened to broader testing.

The Road Ahead: Scaling Claude Responsibly

Looking ahead, Anthropic plans to begin charging companies to access Claude through custom APIs and developer tools. Initial applications could include conversational bots for tasks like tutoring, customer service, and entertainment. Anthropic may also partner with big tech firms to license its Constitutional AI framework.

However, the startup seems committed to scaling Claude responsibly rather than pursuing aggressive monetization. Out-of-control viral adoption like ChatGPT’s carries different dangers than slow incubation with trusted testers. Tensions may arise between responsible open access and profit motives.

There are also technical unknowns about how Claude will perform outside limited domains, and Constitutional AI remains unproven at handling harmful content at massive scale. Still, Anthropic’s principled approach puts safety first in ways that peers such as OpenAI have arguably deprioritized. The coming months will reveal its strengths and limitations.

Above all, Anthropic aspires to lead in safely unlocking AGI’s life-changing potential while avoiding existential catastrophe, perhaps AI’s ultimate balancing act. Its founders acknowledge they do not have all the solutions yet. But by questioning status quo methods, Anthropic offers insights distinct from Big Tech orthodoxy.

Claude kicked off Anthropic’s coming-out party in the AI community. Regardless of whether Claude itself becomes a hit product, Anthropic’s entrance adds a refreshing new voice arguing that AI must align with human values, not mercenary objectives. If Anthropic makes serious progress on key safety challenges, we could look back on it as a turning point for responsible AI done right. The stakes could not be higher, but with its principled approach Anthropic offers hope for a brighter future.

- What differentiates Anthropic’s approach to training generative AI models? After pretraining, Anthropic fine-tunes models like Claude with human feedback and Constitutional AI, which uses a written set of principles to steer the model toward helpful, harmless behavior. Output filters can add a further safeguard against harmful content.

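- What is Constitutional AI and how does Anthropic use it? It’s Anthropic’s framework for aligning AI with human values using a written set of principles. The model first learns to critique and revise its own responses against those principles, then is trained with reinforcement learning on AI-generated preference labels. This is a core part of how Claude is trained.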

- What applications is Anthropic targeting initially for its Claude assistant? Claude is well-suited for conversational bots in domains like customer service, tutoring, and interactive entertainment.

- Does Anthropic plan to offer Claude capabilities to other companies? Yes, through APIs and potential partnerships. But a slow, careful rollout is planned rather than a viral consumer launch.

- What are the main risks if AI like Claude is scaled irresponsibly? Harms could include embedding biases, radicalizing users, spreading misinformation, or enabling malicious actors.