A Guide to AI Agents Transparency: Managing the “Black Box” Problem

What is the “Black Box” Problem in AI Agents?

The “black box” problem in AI agents is the challenge of understanding why an autonomous system makes a specific decision or takes a particular action. AI agent transparency is the practice of building systems and methods that make an agent’s internal reasoning as interpretable as possible. It is a critical component of building safe, trustworthy, and effective autonomous systems.

This challenge is not just academic; it is a fundamental barrier to the widespread adoption of agentic AI in high-stakes environments, because opaque systems are difficult to govern. When an agent can execute financial trades, manage customer data, or interact with other business-critical systems, a lack of transparency is not just a flaw; it is a significant operational risk. Managing that risk is central to AI agent accountability.

Key Takeaways

- The “black box” problem in AI agents is about understanding their decision-making process, not just their text output.

- Analyzing an agent’s “trace” or decision log is the most effective way to debug its behavior and understand its reasoning.

- Explainable AI (XAI) techniques provide valuable insights into agent decisions but do not make the black box completely transparent.

- Designing agents with modularity and using simpler models for critical tasks can inherently improve transparency and reduce risk.

- Human-in-the-loop (HITL) workflows, where a person approves critical actions, remain the most reliable strategy for ensuring AI agent accountability.

It’s crucial to distinguish between the black box problem in a standard Large Language Model (LLM) and in an AI agent.

- LLM Black Box: The mystery is in content generation. We don’t fully know why the model chose a specific sequence of words to form a sentence.

- Agent Black Box: The mystery is in decision-making. We don’t fully know why the agent chose to take a specific action (like calling a tool or sending an email) instead of another. The focus shifts from interpreting words to interpreting AI agent decisions.

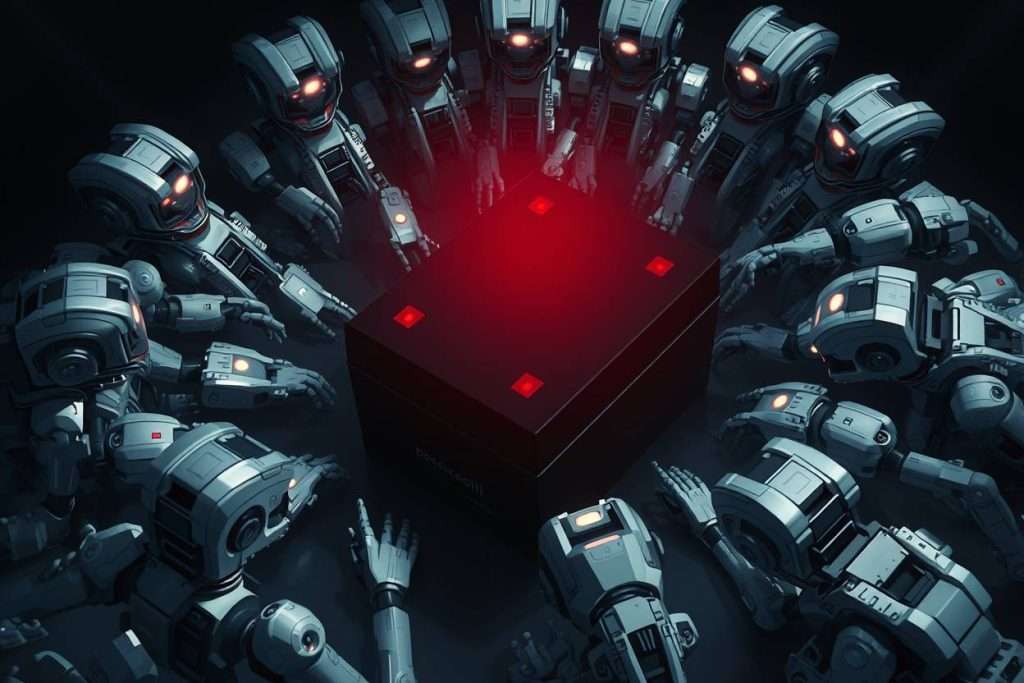

The black box problem is magnified in autonomous systems because of the potential for cascading consequences.

- Cascading Failures: One unexplained bad decision can trigger a series of harmful automated actions, turning a small error into a major incident.

- Impossibility of Accountability: If you don’t know why an agent acted, you cannot assign responsibility, fix the root cause, or prevent it from happening again. This makes auditing AI agents nearly impossible.

- Erosion of Trust: Users and operators will not—and should not—rely on systems whose reasoning they cannot understand, especially when the stakes are high.

How Can We “Peer Inside the Black Box”? The Power of Observability

The most fundamental and practical technique for increasing AI agents transparency is observability. This means having the ability to monitor and understand the agent’s internal state and reasoning process at every step.

An “agent trace” is a detailed, step-by-step log of the agent’s internal “thought process.” It is the primary tool for auditing AI agents and understanding their behavior. A good trace captures the key components of the agent’s reasoning loop:

- The agent’s overall plan to achieve its goal.

- The specific tools it chose to use at each step.

- The exact inputs it provided to those tools.

- The outputs it received back from the tools.

The trace allows you to move beyond the failed final output and pinpoint the exact step where the agent’s logic went wrong. By reviewing these AI agent decision logs, developers can identify if the failure was caused by a flawed plan, incorrect tool usage, or an external error. This is the foundation of practical AI agent accountability.
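The trace components listed above can be sketched as a small data structure. This is a minimal illustration, not a reference to any particular tracing library: the class names `TraceStep` and `AgentTrace`, the `first_failure` helper, and the refund scenario are all hypothetical.

```python
from dataclasses import dataclass, field, asdict
from typing import Any, Optional
import json


@dataclass
class TraceStep:
    """One iteration of the agent's reasoning loop."""
    tool: str                     # tool the agent chose at this step
    tool_input: dict              # exact input it passed to the tool
    tool_output: Any = None       # output it received back, if any
    error: Optional[str] = None   # set when the tool call failed


@dataclass
class AgentTrace:
    """Step-by-step record of a single agent run."""
    goal: str
    plan: list
    steps: list = field(default_factory=list)

    def record(self, tool, tool_input, tool_output=None, error=None):
        self.steps.append(TraceStep(tool, tool_input, tool_output, error))

    def first_failure(self):
        """Return (index, step) for the first failed step, or None."""
        for index, step in enumerate(self.steps):
            if step.error is not None:
                return index, step
        return None

    def to_json(self):
        # default=str keeps the log writable even for non-JSON outputs
        return json.dumps(asdict(self), indent=2, default=str)


# Hypothetical run: the lookup succeeds, the refund call fails.
trace = AgentTrace(goal="Refund order 1042",
                   plan=["look up order", "issue refund"])
trace.record("lookup_order", {"order_id": 1042}, {"status": "shipped"})
trace.record("issue_refund", {"order_id": 1042}, error="amount missing")

failure = trace.first_failure()
```

Here `failure` points at the second step, which shows that the plan was reasonable but the agent supplied an incomplete input to the refund tool. Serializing with `to_json()` gives a decision log that can be stored and audited later.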

What are Practical Techniques for Explaining Agent Decisions? (Explainable AI – XAI)

Explainable AI (XAI) is a set of methods designed to make AI decisions more interpretable. For agentic systems, several techniques are particularly useful for creating explainable AI agents.

One simple yet powerful technique is to instruct the agent explicitly to state its reasoning before it acts. Adding a rule to the agent’s prompt such as “Before using any tool, explain your reasoning, your plan, and the tool you will use” forces it to generate a natural-language explanation of its intent, which is then captured in the agent trace.
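A rough sketch of how such a rule might be wired into a prompt and checked on the way back. The rule text comes from the paragraph above; the `Reasoning:` / `Action:` reply format, the `FORMAT_HINT` string, and both function names are assumptions made for illustration, not part of any specific agent framework.

```python
REASONING_RULE = (
    "Before using any tool, explain your reasoning, your plan, "
    "and the tool you will use."
)

# Assumed reply format so the explanation can be separated from the action.
FORMAT_HINT = "Reply with a 'Reasoning:' section followed by an 'Action:' line."


def build_system_prompt(base_instructions: str) -> str:
    """Append the reasoning rule and format hint to the agent's instructions."""
    return f"{base_instructions.strip()}\n\n{REASONING_RULE}\n{FORMAT_HINT}"


def split_reply(reply: str):
    """Split a reply into (reasoning, action).

    Raises ValueError when the reply has no action or no stated reasoning,
    so an unexplained action can be blocked before any tool runs.
    """
    reasoning, separator, action = reply.partition("Action:")
    reasoning = reasoning.replace("Reasoning:", "", 1).strip()
    if not separator or not reasoning:
        raise ValueError("reply must state its reasoning before the action")
    return reasoning, action.strip()


prompt = build_system_prompt("You are a customer support agent.")
reasoning, action = split_reply(
    "Reasoning: The customer asked about a late order.\n"
    "Action: lookup_order"
)
```

The extracted `reasoning` string is what would be written to the trace next to the tool call it justifies.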

- LIME (Local Interpretable Model-agnostic Explanations) is a popular XAI technique for interpreting AI agent decisions one at a time. It works by fitting a simpler, temporary “explainer” model around a single, specific decision made by the complex agent. This lets you see which factors were most influential for that particular instance without having to understand the entire complex model at once.

- SHAP (SHapley Additive exPlanations) is another powerful method for creating explainable AI agents. It assigns an “importance value” to each input feature that contributed to a decision. For example, it could show that for a loan application agent, an applicant’s credit score contributed +0.5 to the “approve” decision, while their debt-to-income ratio contributed -0.3. This provides a more quantitative view of the decision-making process.
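To make the idea of per-feature importance values concrete, the sketch below computes exact Shapley values by brute force for a toy loan score. It is not the SHAP library, which estimates these values with more scalable methods; the scoring rule `loan_score`, its weights, and the applicant and baseline figures are hypothetical, chosen so the result mirrors the +0.5 and -0.3 example above.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values for `model` at `instance`, relative to `baseline`.

    Features left out of a coalition take their baseline value. The cost
    grows as 2^n in the number of features, so this only suits small n.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(x)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        phi[name] = total
    return phi


def loan_score(x):
    # Hypothetical scoring rule, for illustration only.
    return 0.005 * x["credit_score"] - 2.0 * x["debt_to_income"]


applicant = {"credit_score": 720, "debt_to_income": 0.45}
baseline = {"credit_score": 620, "debt_to_income": 0.30}  # assumed "typical" applicant

contributions = shapley_values(loan_score, applicant, baseline)
# credit_score contributes about +0.5 and debt_to_income about -0.3,
# up to floating-point rounding.
```

The contributions always sum to the difference between the applicant’s score and the baseline score, which is what makes them usable as an accounting of a single decision.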

How Can You Design Agents for Transparency from the Start?

Achieving AI agents transparency is not just about after-the-fact analysis; it’s also about making smart architectural choices during the design phase.

In machine learning, the most powerful models are often the most opaque. When designing an agent, you must weigh this trade-off. For critical sub-tasks where explainability is paramount, it may be better to use a simpler, more transparent model (such as a decision tree or a linear regression model) instead of a massive neural network.
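As an illustration of what “transparent” means in practice, here is a tiny hand-written decision tree that returns the path it took along with its decision. The function name, the refund scenario, and the thresholds (100 and 30 days) are hypothetical.

```python
def review_refund(amount, days_since_purchase):
    """Hand-written decision tree that reports the branch it followed."""
    path = []
    if amount > 100:
        path.append("amount > 100")
        return "escalate", path
    path.append("amount <= 100")
    if days_since_purchase > 30:
        path.append("days_since_purchase > 30")
        return "deny", path
    path.append("days_since_purchase <= 30")
    return "approve", path


decision, path = review_refund(amount=40, days_since_purchase=10)
# decision is "approve"; path lists the two conditions that led there
```

Every outcome can be traced to explicit conditions, which is the property a large neural network does not offer for the same sub-task.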

Instead of building a single, monolithic agent to do everything, a better approach is to design a team of smaller, single-purpose agents. The behavior of these smaller, specialized agents is far easier to test, validate, and understand. This modular design is a key strategy for building more manageable and explainable AI agents.
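A minimal sketch of this modular layout, with plain functions standing in for model-backed agents. The agent names, the shared `state` dictionary, and the routing rule are assumptions for illustration; in a real system each function would wrap its own model or tool call.

```python
import re


def extract_order_id(state):
    """Single purpose: find an order number in the customer's message."""
    match = re.search(r"order\s+#?(\d+)", state["message"], re.IGNORECASE)
    return {"order_id": int(match.group(1)) if match else None}


def choose_queue(state):
    """Single purpose: pick a queue based on what was extracted."""
    queue = "refunds" if state["order_id"] is not None else "human_support"
    return {"queue": queue}


def run_pipeline(message, agents):
    """Run each agent in order and log what each one contributed."""
    state = {"message": message}
    log = []
    for name, agent in agents:
        update = agent(state)
        log.append({"agent": name, "output": update})
        state.update(update)
    return state, log


AGENTS = [("extractor", extract_order_id), ("router", choose_queue)]
state, log = run_pipeline("Please refund order #1042", AGENTS)
```

Because each agent has one job, it can be tested on its own, and the log shows which agent produced which part of the final state.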

What is the Role of the “Human-in-the-Loop” in Managing Black Box Risk?

The most reliable, non-technical strategy for managing the risks of opaque AI is ensuring meaningful human oversight.

No matter how complex or opaque an agent becomes, a final point of human judgment can prevent harmful actions from being executed. This is the cornerstone of responsible AI implementation and is essential for real-world AI agent accountability.

A common and effective design pattern is the “review and approve” workflow. In this system, the agent can perform all the necessary research, analysis, and planning, but it must present its proposed action to a human operator for final approval before it is allowed to execute it. This is a practical way of auditing AI agents in real time.
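The “review and approve” pattern can be sketched as a gate between the agent’s proposal and the tool that would carry it out. All names here (`ProposedAction`, `execute_with_approval`, `send_refund`) are hypothetical, and the `approve` callback stands in for whatever interface the human reviewer actually uses.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    """What the agent wants to do, and why."""
    tool: str
    arguments: dict
    rationale: str


def execute_with_approval(action, approve, tools):
    """Run the action only if the reviewer callback returns True."""
    if not approve(action):
        return {"status": "rejected", "result": None}
    result = tools[action.tool](**action.arguments)
    return {"status": "executed", "result": result}


sent = []  # records refunds that were actually issued


def send_refund(order_id, amount):
    sent.append((order_id, amount))
    return "refund issued"


TOOLS = {"send_refund": send_refund}

action = ProposedAction(
    tool="send_refund",
    arguments={"order_id": 1042, "amount": 25.0},
    rationale="Order arrived damaged; refund is within policy.",
)

# Stub reviewer that rejects the proposal; a real one would show the
# rationale and arguments to a person and wait for their decision.
outcome = execute_with_approval(action, approve=lambda a: False, tools=TOOLS)
```

The key design choice is that the tool is never called on the rejected path, so the agent cannot act on a proposal the reviewer has not accepted.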

For a human-in-the-loop system to work, the interface must be effective. A good dashboard should clearly visualize the agent’s proposed plan, the key data it used to arrive at that plan, and its confidence score. This allows the human operator to make a fast and informed decision about whether to approve or reject the agent’s proposed action.

What are Common Misconceptions About the “Black Box” Problem?

To properly manage this challenge, we must first dispel some common myths.

One myth is that XAI techniques fully open the black box. In reality, they provide valuable insights into, and approximations of, an agent’s reasoning. They do not provide a perfect, deterministic explanation of the complex inner workings of a neural network. They make the black box less opaque, but they do not eliminate it entirely.

Another myth is that the black box can be removed altogether. In reality, for the most powerful deep learning models, a degree of opacity is an inherent trade-off for their high performance. The goal of AI agent transparency is to manage this opacity through observability, explainability, and oversight, not necessarily to erase it completely.

Conclusion: From Absolute Control to Informed Trust

The tools of the past were fully understood and directly controlled; we knew every rule in the machine. The AI agents of the future are different. They are partners whose internal reasoning may never be fully transparent to us. Managing the “black box” problem and improving AI agents transparency, therefore, is not just a technical challenge—it is a philosophical one.

It marks a fundamental shift in our relationship with technology, moving away from a need for absolute control and toward the necessity of building systems that, while not fully understood, can earn our informed trust through robust processes for observability, accountability, and oversight.