Wan AI: Powerful & Free AI Video Creation

Wan AI is a creative platform from Alibaba that generates video and images from text and image prompts. Developed by Alibaba’s Institute for Intelligent Computing, this open-source model makes high-quality content creation more accessible.

Wan AI is particularly useful for content creators, marketers, developers, and AI researchers who need to produce visual content efficiently. Its main appeal is high-grade video generation without the costs or restrictive licenses of many commercial competitors. The model changes traditional video production by reducing the time, technical skill, and budget required to create visual narratives, and it moves powerful AI from closed, paid platforms into a global open-source community. Its text-to-video (T2V) and image-to-video (I2V) functions are central to how it works.

Best Use Cases of Wan AI

- Independent Filmmakers & Animators: An indie creator can visualize complex scenes or create entire animated shorts without a large budget. For example, they can use Wan AI’s text-to-video function to generate a clip of a “cyberpunk city in a neon-lit rain.” They can also turn a character sketch into a short animated clip to test movement, which cuts pre-production costs.

- Marketing Professionals: A marketing manager can quickly create video assets for social media campaigns. Instead of organizing a costly video shoot, they can use Wan AI to generate a dynamic video from a single product image. They can then generate multiple ad variations from text prompts and A/B test different concepts at minimal expense.

- Educators & eLearning Developers: An instructional designer can convert static educational materials into engaging video lessons. Using the image-to-video feature, they can turn a diagram of the solar system into an animated sequence, or they can generate a historical scene from a text description. This makes learning content more dynamic for students.

- Game Developers & Designers: A game developer can rapidly prototype in-game cutscenes or environmental effects. For example, they can use Wan AI to visualize a magical spell from a game’s design document or create a moving background for a level from concept art. This speeds up the creative workflow and iteration process.

Pros of Wan AI

- Completely Free & Open-Source: Wan AI is freely available for both commercial and research use, eliminating the subscription costs associated with its main competitors.

- Runs on Consumer Hardware: The model is optimized to run on consumer-grade GPUs like the NVIDIA RTX 4090, making it accessible without expensive cloud computing resources.

- High-Quality Cinematic Output: It is trained on curated aesthetic data, allowing it to produce videos with impressive cinematic control over lighting, composition, and motion.

- Uncensored Creative Freedom: Unlike many commercial tools, Wan AI has fewer content restrictions, giving artists and creators greater flexibility to generate a wide range of concepts.

- Strong Image-to-Video Capability: The I2V feature excels at creating videos with stable, natural motion while maintaining high fidelity to the source image.

- Active Development: Backed by Alibaba, the model benefits from continuous research and significant upgrades, such as the recent Wan 2.2 release.

Cons of Wan AI

- Technical Setup Required: Running the model locally requires a powerful computer and familiarity with environments like Pinokio or ComfyUI, which can be a barrier for non-technical users.

- Potential for Long Wait Times: Public demos on platforms like Hugging Face can experience long queues due to high demand.

- Steeper Learning Curve: Without a polished commercial user interface, new users may face a steeper learning curve to master prompt engineering and achieve desired results.

- Quality Can Vary: While capable of stunning results, the output quality is not always as consistent or polished as the top-tier results from closed models like OpenAI’s Sora.

Key Features of Wan AI

- Text-to-Video AI (T2V): Generates high-definition video clips (720p at 24fps) directly from descriptive text prompts.

- Image to Video AI (I2V): Animates still images, creating video content that preserves the character, style, and composition of the original picture.

- Mixture-of-Experts (MoE) Architecture: Uses an advanced model structure that increases capability while keeping computational demands relatively low.

- Cinematic Style Control: The model understands and applies cinematic concepts like specific lighting and camera angles based on text prompts.

- Open-Source License: Allows anyone to download, modify, and integrate the Wan AI model into their own applications for free.

- Efficient Performance: Built to run effectively on single consumer-grade GPUs, making powerful AI video tools more available.

- Text-to-Image Generation: Includes a capable T2I model for creating photorealistic and stylized images from text.

- High-Fidelity Motion: The model is designed to generate natural and believable motion dynamics, avoiding many artifacts common in earlier AI video models.

Homepage Wan AI

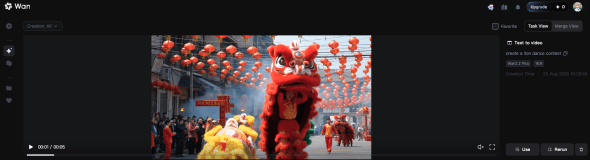

Wan AI Video

Frequently Asked Questions

What is Wan AI?

Wan AI is an open-source visual generative model from Alibaba that creates video and images from text or image inputs.

Is Wan AI free to use?

Yes. Wan AI is released under an open-source license, making it free for both personal and commercial projects.

What hardware do I need to run Wan AI?

You can run Wan AI on a consumer-grade GPU with at least 8GB of VRAM. A card like the NVIDIA RTX 4090 is ideal for the best performance.

How does Wan AI compare to OpenAI’s Sora?

Wan AI is an open-source alternative that runs locally and offers more creative freedom, while Sora is a closed, commercial model known for its highly realistic and longer-duration video generation.

Can I use Wan AI for commercial work?

Yes, the open-source license permits the use of Wan AI for commercial purposes without any fees.

What is the maximum video resolution for Wan AI?

The Wan 2.2 model supports video generation up to 720p resolution at 24 frames per second.

Does Wan AI require an internet connection?

Once installed and set up on a local machine, Wan AI can be run without an active internet connection.
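To put the figures quoted in the answers above into perspective, here is a minimal Python sketch that estimates the frame count and uncompressed size of a clip at 720p and 24 fps, and checks a GPU against the 8GB VRAM minimum. It does not call Wan AI or any of its tooling. The function names, the reading of “720p” as 1280x720, and the 8-bit RGB frame format are assumptions made for illustration.

```python
from dataclasses import dataclass

# Figures quoted in this article (its claims, not measured values).
MIN_VRAM_GB = 8   # minimum VRAM mentioned in the FAQ
FPS = 24          # frame rate quoted for Wan 2.2

# Assumption: "720p" is taken to mean 1280x720 pixels.
WIDTH, HEIGHT = 1280, 720


@dataclass
class ClipEstimate:
    frames: int
    raw_rgb_megabytes: float


def estimate_clip(seconds: float) -> ClipEstimate:
    """Return frame count and uncompressed 8-bit RGB size for a clip."""
    frames = round(seconds * FPS)
    raw_bytes = frames * WIDTH * HEIGHT * 3  # assumption: 3 bytes per pixel
    return ClipEstimate(frames, raw_bytes / 1_000_000)


def meets_minimum_vram(vram_gb: float) -> bool:
    """Hypothetical helper: compare a GPU's VRAM with the quoted minimum."""
    return vram_gb >= MIN_VRAM_GB


if __name__ == "__main__":
    clip = estimate_clip(5)
    print(f"{clip.frames} frames, about {clip.raw_rgb_megabytes:.0f} MB raw")
    print("24 GB card meets minimum:", meets_minimum_vram(24))
    print("6 GB card meets minimum:", meets_minimum_vram(6))
```

For a 5-second clip this works out to 120 frames. The size figure describes raw frame data only; an encoded video file will be much smaller.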

TechPilot’s Verdict on Wan AI

I’ve been tracking the AI video space for years, and the pace of change is staggering. We’ve moved from blurry, short clips to near-cinematic quality in what feels like the blink of an eye. When a major player like Alibaba drops a powerful open-source model like Wan AI, we at TechPilot pay close attention. My goal was to assess whether this tool truly democratizes video creation or is mainly for developers and AI researchers.

First, I explored its text-to-video capabilities through numerous user-generated examples, including prompts like “create a lion dance contest.” I wanted to see how Wan AI would handle a scene with intricate costumes, specific traditional movements, and multiple performers. The result was a mixed but fascinating success. The model nailed the aesthetics: it generated a clip bursting with brilliant reds and golds that captured the energetic atmosphere, and the general motion of the lion costume was present. The details, however, revealed the current limitations of the technology. The dancers’ legs beneath the costume sometimes appeared inconsistently, and the specific footwork of the dance was lost in a more generic bouncing movement. The clip was visually striking, but it lacked the cultural authenticity of real footage. This shows that while Wan AI is excellent at interpreting mood and color, it still struggles with complex, coordinated group actions.

Next, I focused on the image-to-video feature, which I believe is its strongest selling point. In the examples I reviewed, users fed it a portrait of a person with the prompt “subtly smiling, wind blowing through hair.” Wan AI brought the static image to life without losing the person’s likeness, a notoriously difficult task. The movement was subtle and realistic, not distorted or uncanny. This feature alone is a game-changer for marketers who want to animate product shots and for artists looking to add life to their digital paintings.

The main hurdle to mass adoption is the learning curve. While tools like ComfyUI simplify the process, it is still not the simple “type and click” experience of web-based platforms.

When considering an AI video generator, it’s crucial to weigh open-source flexibility against the polished experience of commercial tools.

Top Alternatives to Wan AI

Sora (by OpenAI): Sora is OpenAI’s flagship text-to-video model, known for generating longer, highly coherent, and photorealistic videos. It excels at interpreting complex prompts and maintaining character consistency across multiple shots. However, Sora is a closed model with limited access and will likely operate on a credit-based system tied to a ChatGPT subscription. It’s a better choice for creative professionals who need the absolute highest quality and are willing to pay a premium for it, whereas Wan AI is the superior option for developers, researchers, and users who prioritize cost, customizability, and creative freedom.

Pika Labs: Pika Labs offers a user-friendly, web-based platform with a strong focus on creative editing tools like lip sync, region modification, and camera control. Its pricing is accessible, with a free tier and affordable paid plans, making it great for social media creators and artists. Pika is a better choice for users who want a simple interface and powerful in-app editing features without any technical setup. Wan AI, in contrast, offers more raw generative power and uncensored output but requires a local setup and lacks Pika’s integrated editing suite.

RunwayML: RunwayML is a mature, all-in-one creative platform that provides a vast suite of “AI Magic Tools” beyond just video generation, including advanced editing and effects. Its new Gen-3 Alpha model is a direct competitor in terms of quality. Runway is aimed at filmmakers and professional artists who need a comprehensive toolkit for their entire workflow. It is a better choice for professionals who need an integrated ecosystem of advanced tools and are willing to pay a subscription. Wan AI is the better pick for those who need a powerful, standalone video generation model for free and have the technical skills to run it.

In summary, Pika Labs is best for ease of use and social media content, RunwayML is the choice for professional all-in-one editing suites, and Sora represents the cutting edge of quality.

Final verdict

Wan AI is a landmark release in the open-source AI community. It’s a powerful, versatile, and—most importantly—accessible tool that puts professional-grade video generation into the hands of anyone with a decent gaming PC. While it may lack the polished user experience of its commercial rivals, its performance, cost-effectiveness, and creative freedom are undeniable. For developers, tinkerers, and budget-conscious creators, Wan AI isn’t just a good option; it’s the new standard.